Underdeveloped Artificial Intelligence (AI) tools have raised hundreds of thousands of false red flags over online child pornography in Karnataka, cybercrime investigators and experts say.

In numerous cases, pictures and videos of children playing naked outside homes or bathing on beaches have been flagged as child pornography.

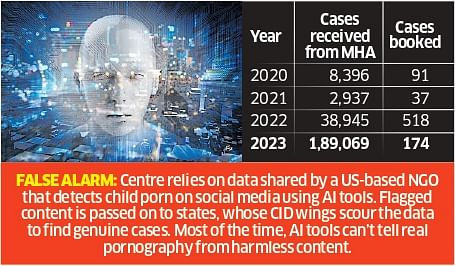

Between 2019-2020 and 2022-23, the state’s Criminal Investigation Department (CID) received 2.39 lakh tips about “child pornography” through Cyber Tipline, which works under the National Crime Records Bureau (NCRB). But only 820 of them, or a minuscule 0.34%, fell within the definition of child pornography, data reviewed by DH shows.

The Ministry of Home Affairs relies on data shared by the National Center for Missing and Exploited Children, a US-based NGO, to detect child pornography on social media and other online platforms. The nonprofit uses AI tools deployed by social media platforms to filter the data.

The ministry sends this data to Cyber Tipline, which passes it on to states.

In Karnataka, a dedicated wing in the CID manually scours the data to detect content that can be classified as child pornography. Of the 1.89 lakh tips received in 2022-23, only 174 could be classified as genuine cases of child pornography.

Cybercrime investigators and experts are unanimous that AI tools need large-scale improvements to be effective in detecting child pornography.

A CID official was blunt in his assessment of what’s behind the “huge” number of false red flags. “For the most part, these AI tools cannot tell real child pornography from harmless content,” he tells DH.

Experts in Machine Learning, Data Structures and Algorithms say different social platforms use different AI tools. The technology to detect such content is still in its nascent stage, hence the discrepancies, they add.

Prof Utkarsh Mahadeo Khaire from the Indian Institute of Information Technology, Dharwad, says AI tools cannot be effective unless they are fed large amounts of accurately tagged and processed data. “This may sound easy but the existence of vast elements in data makes it a complex job,” he tells DH.

Khaire says each dataset contains a plethora of elements, all of which have to be tagged separately. “The tool should be fed ample data with tagged elements to make it familiar with the content,” he explains. “That’s an intricate task.”

If there is not enough data about a tagged element, the tool will simply fail to recognise it. “To achieve accuracy, you need large amounts of correctly classified data as well as human intervention,” Khaire says.

According to another professor at IIIT Dharwad, one more reason for these discrepancies is that some elements are always left out during feeding and specific tagging. “In many cases, accuracy is impossible because not much data is available on such left-out data.”

The professor believes all the existing AI tools are narrow and can do only specific tasks. It’s unlikely that these fields will be fully automated anytime soon, he adds.

The existing AI tools are not programmed to recognise social setups either, according to Khaire. “For instance, if we share any video or audio of a play about child pornography, AI will detect it as inappropriate. AI still has a long way to go before it’s able to accurately read social setups,” he says.

Cultural barriers

Cultural barriers are another major hurdle. The interpretation of data collated by social media companies will vary from one country to another because of cultural and social differences, an investigator at the CID’s cybercrime cell notes.

In rural India, for instance, parents sometimes don’t fully clothe their toddlers and film them as they play. These pictures and videos are often shared on social media. “This may be unacceptable in other cultures. Unless it’s properly tagged, such content will be flagged as child pornography,” he adds.

(Published 17 December 2023, 22:11 IST)